ETL Data Loading Techniques to Meet Deadlines

In data processing, meeting deadlines is critical, especially in time-sensitive projects. The Extract, Transform, Load (ETL) process is central to data integration and warehousing, and loading data on time depends on applying effective techniques at every phase of the ETL pipeline. Let’s explore strategies for optimizing ETL jobs, with examples from SAP Data Services (BODS), so that they finish within project deadlines.

Design ETL jobs efficiently by optimizing the data flow and eliminating unnecessary transformations. Use data flow patterns and stages suited to the workload to streamline the ETL process, which improves performance and reduces processing time.

Instead of reloading entire datasets on every run, use incremental loading to load only new or changed records. This reduces the volume of data each run must extract, transform, and write, which lowers processing overhead and speeds up the load.
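A minimal sketch of watermark-based incremental loading, using Python's built-in `sqlite3` module in place of real source and target systems. The `orders` table, its `updated_at` column, and the `incremental_load` function are hypothetical; the sketch only illustrates the idea and is not how BODS implements change capture.

```python
import sqlite3

def incremental_load(src, tgt, last_watermark):
    """Copy rows changed after last_watermark from src to tgt; return (new_watermark, rows_loaded)."""
    # Extract only rows modified since the previous run (hypothetical `orders` table)
    rows = src.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # INSERT OR REPLACE overwrites the earlier version of a changed row (keyed on the primary key)
    tgt.executemany("INSERT OR REPLACE INTO orders (id, amount, updated_at) VALUES (?, ?, ?)", rows)
    tgt.commit()
    # The newest timestamp seen becomes the watermark for the next run
    new_watermark = rows[-1][2] if rows else last_watermark
    return new_watermark, len(rows)

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
    return conn

# Demo: a full first run, then a run that picks up only one changed row and one new row
src, tgt = make_db(), make_db()
src.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                [(1, 10.0, "2024-01-01"), (2, 20.0, "2024-01-02")])
wm, n1 = incremental_load(src, tgt, "")
src.execute("UPDATE orders SET amount = 25.0, updated_at = '2024-01-03' WHERE id = 2")
src.execute("INSERT INTO orders VALUES (3, 30.0, '2024-01-03')")
wm, n2 = incremental_load(src, tgt, wm)
```

Because the filter uses a strict `>`, a row written later with a timestamp equal to the stored watermark would be missed; real pipelines often re-read a small overlap window or use a monotonically increasing sequence column instead.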

Divide the data loading tasks into smaller units and execute them concurrently. Spreading the work across multiple processors or threads can significantly reduce loading time and makes fuller use of the available system resources.
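A minimal sketch of chunked parallel loading with Python's `concurrent.futures`. The `load_chunk` function is a hypothetical stand-in for whatever writes a chunk to the target. Threads suit I/O-bound work such as database writes; CPU-heavy transforms in Python would typically use `ProcessPoolExecutor` instead because of the global interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor

def chunked(rows, size):
    """Split rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]

def load_chunk(chunk):
    """Hypothetical loader: applies a trivial transform and returns the rows it 'loaded'."""
    return [(row_id, value * 2) for row_id, value in chunk]

def parallel_load(rows, chunk_size=1000, workers=4):
    """Load chunks concurrently; pool.map returns results in chunk order."""
    chunks = chunked(rows, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(load_chunk, chunks))
    # Flatten the per-chunk results back into one list
    return [row for part in results for row in part]

loaded = parallel_load([(i, i) for i in range(10)], chunk_size=3, workers=2)
```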

Parallel data flows and workflows

During parallel execution of data flows and workflows, SAP Data Services coordinates the parallel steps, waits for all steps to complete, then starts the next sequential step.

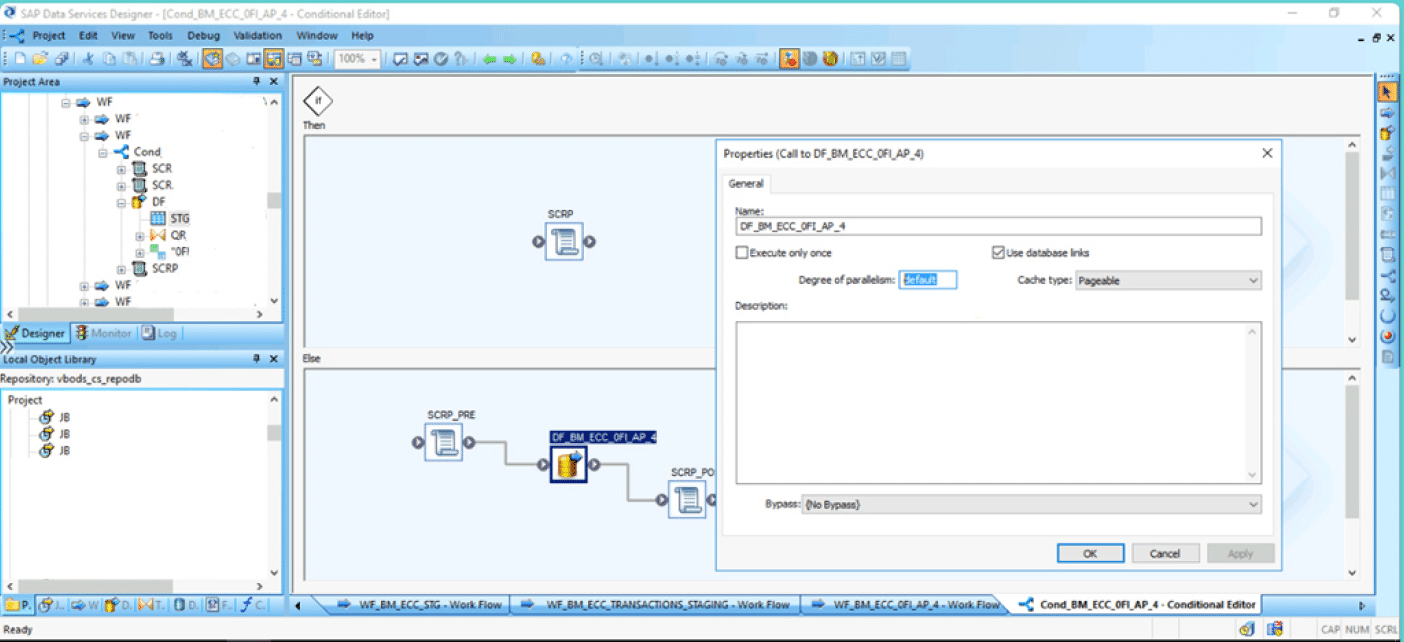

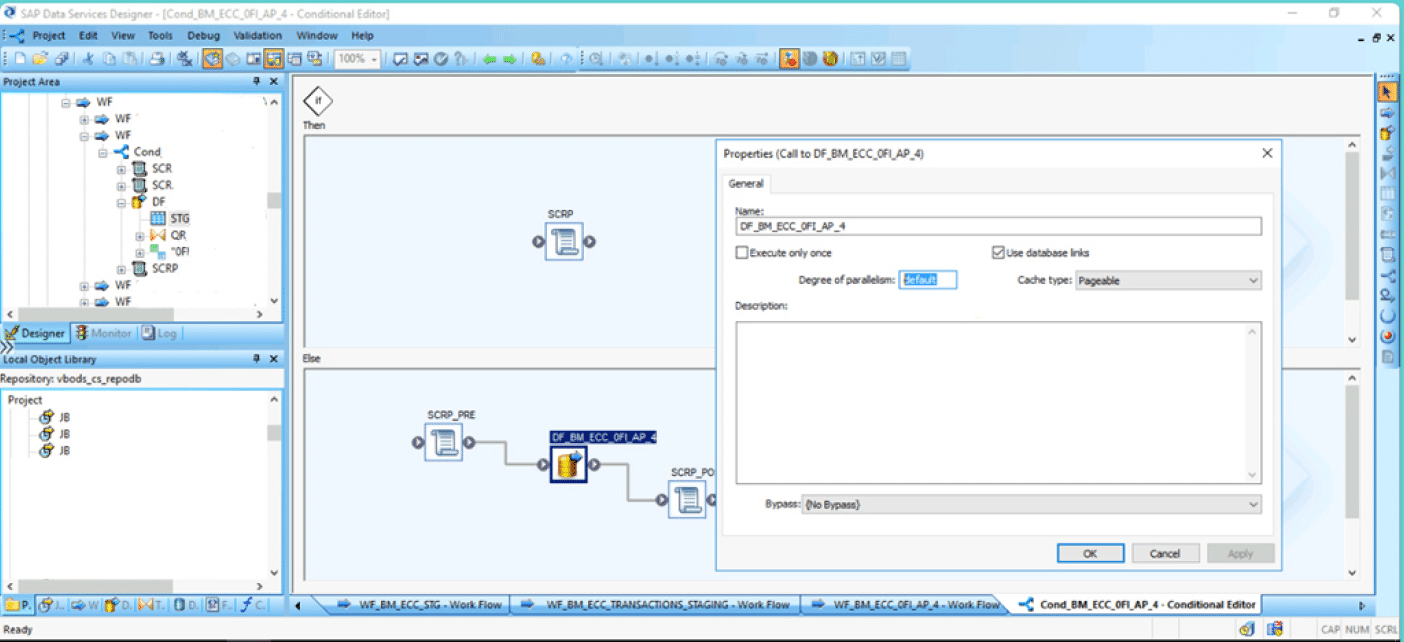
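The coordination described above is a fork-join pattern. The following minimal Python sketch mimics it with `concurrent.futures`; the step names are hypothetical, and the sketch illustrates the ordering only, not how Data Services schedules work internally.

```python
from concurrent.futures import ThreadPoolExecutor, wait

def run_stage(parallel_steps, next_step):
    """Run independent steps concurrently, wait for all of them, then run the next sequential step."""
    with ThreadPoolExecutor(max_workers=max(1, len(parallel_steps))) as pool:
        futures = [pool.submit(step) for step in parallel_steps]
        wait(futures)  # block until every parallel step has finished
        # result() re-raises a step's exception, so a failure stops the sequence here
        results = [f.result() for f in futures]
    return next_step(results)

log = []

def make_step(name):
    """Build a hypothetical load step that records its name when it runs."""
    def step():
        log.append(name)
        return name
    return step

def load_fact(dim_results):
    """Hypothetical sequential step that depends on all dimension loads."""
    log.append("fact_sales")
    return sorted(dim_results)

outcome = run_stage([make_step("dim_customer"), make_step("dim_product")], load_fact)
```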

Parallel execution in data flows

Set the degree of parallelism to match the available system resources so that data flows finish sooner. In SAP Data Services Designer, open the Data Flow tab in the object library, right-click the applicable data flow, select Properties, and enter a number in the Degree of parallelism option.

Ensure data integrity by building data quality checks into ETL workflows. SAP BODS provides built-in Data Quality transforms for address cleansing, data cleansing, and geocoding that validate and cleanse data during transformation, reducing errors and improving data accuracy.
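As a rough illustration of rule-based cleansing and validation, the following Python sketch standardizes a few fields and then routes each row to a pass or fail list, recording which rules it broke. The column names and rules are hypothetical, and this is not the API of the BODS Data Quality transforms, which are configured in Designer rather than coded.

```python
import re

# Hypothetical rules: column name -> predicate the (cleansed) value must satisfy
RULES = {
    "customer_id": lambda v: v is not None,
    "email": lambda v: isinstance(v, str) and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "country": lambda v: v in {"US", "DE", "IN"},
}

def cleanse(row):
    """Standardize text fields: trim whitespace, lowercase email, uppercase country code."""
    out = dict(row)
    if isinstance(out.get("email"), str):
        out["email"] = out["email"].strip().lower()
    if isinstance(out.get("country"), str):
        out["country"] = out["country"].strip().upper()
    return out

def validate(rows, rules=RULES):
    """Cleanse each row, then split rows into (passed, failed); failed rows list broken rules."""
    passed, failed = [], []
    for row in map(cleanse, rows):
        errors = [col for col, check in rules.items() if not check(row.get(col))]
        if errors:
            failed.append({**row, "_errors": errors})
        else:
            passed.append(row)
    return passed, failed

good, bad = validate([
    {"customer_id": 1, "email": " Ann@Example.com ", "country": "us"},
    {"customer_id": None, "email": "not-an-email", "country": "FR"},
])
```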

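Optimize data loading performance with appropriate indexing and partitioning. Indexes on the columns used for lookups and comparisons speed up data retrieval, but every index also adds work to each insert, so for very large loads it can be faster to drop non-essential indexes on the target table and rebuild them after the load. Partitioning large datasets by criteria such as date ranges or geographical regions lets smaller subsets be loaded in parallel.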

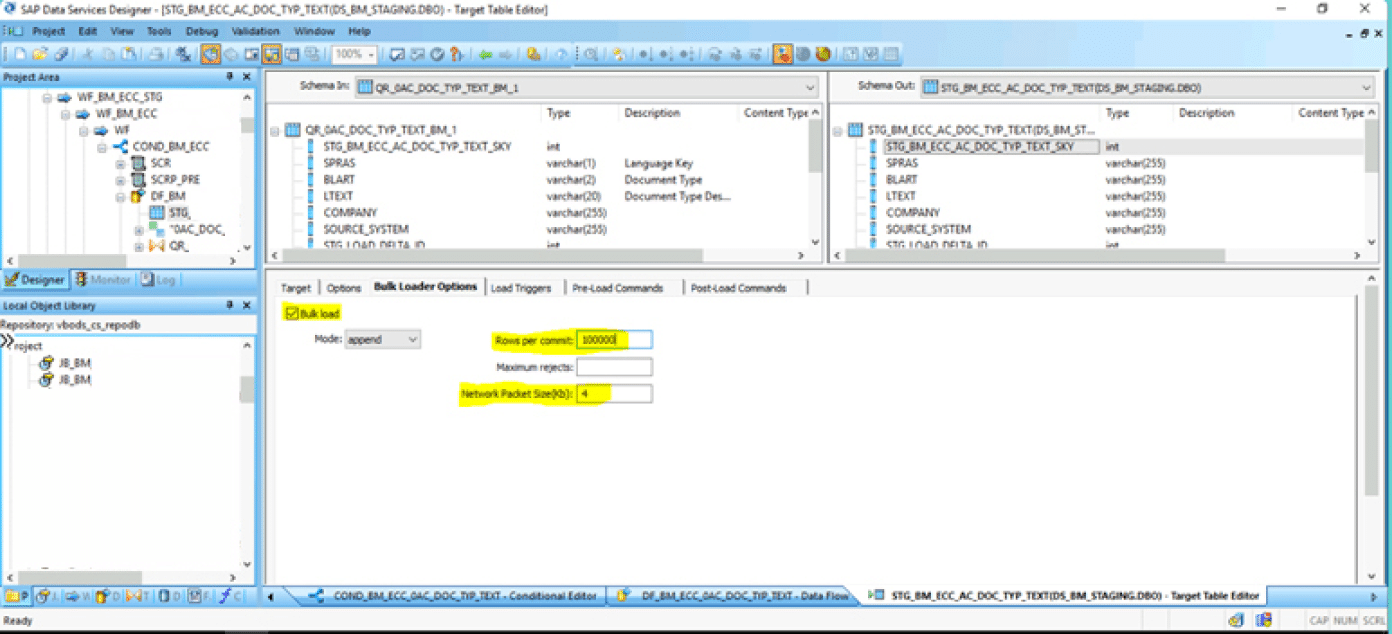
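A minimal sketch of range partitioning by month in Python: rows are grouped by the year and month of a date column so that each group can be loaded as an independent unit, for example by separate parallel workers. The `order_date` column is hypothetical, and table partitioning inside the target database is configured in that database, not in code like this.

```python
from collections import defaultdict
from datetime import date

def partition_by_month(rows, date_key="order_date"):
    """Group rows into partitions keyed by (year, month) of the date column."""
    parts = defaultdict(list)
    for row in rows:
        d = row[date_key]
        parts[(d.year, d.month)].append(row)
    return dict(parts)

rows = [
    {"id": 1, "order_date": date(2024, 1, 5)},
    {"id": 2, "order_date": date(2024, 1, 20)},
    {"id": 3, "order_date": date(2024, 2, 1)},
]
partitions = partition_by_month(rows)
# Each partition could now be handed to its own loader
sizes = {key: len(part) for key, part in partitions.items()}
```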

Use the bulk loading options that SAP BODS supports for the target database to load data in large batches. Bulk loading is generally much faster than row-by-row processing because it avoids the overhead of handling each row as a separate operation, which helps meet tight deadlines by accelerating data transfer to the target system.

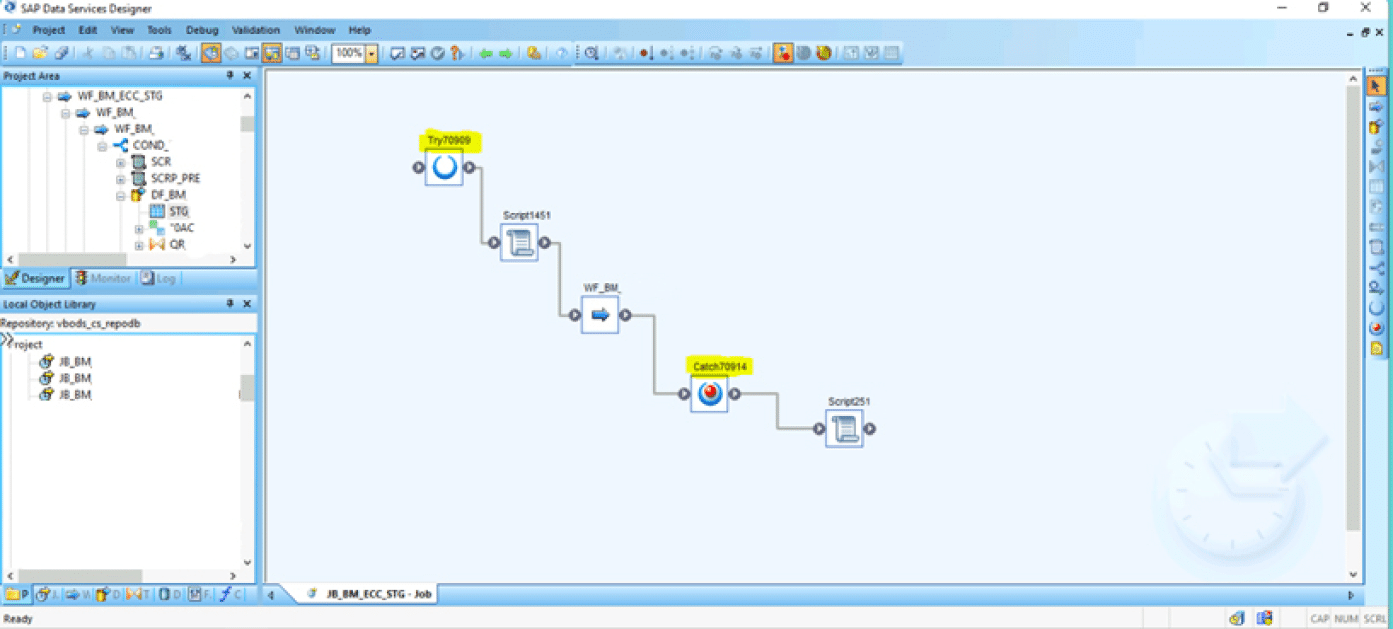

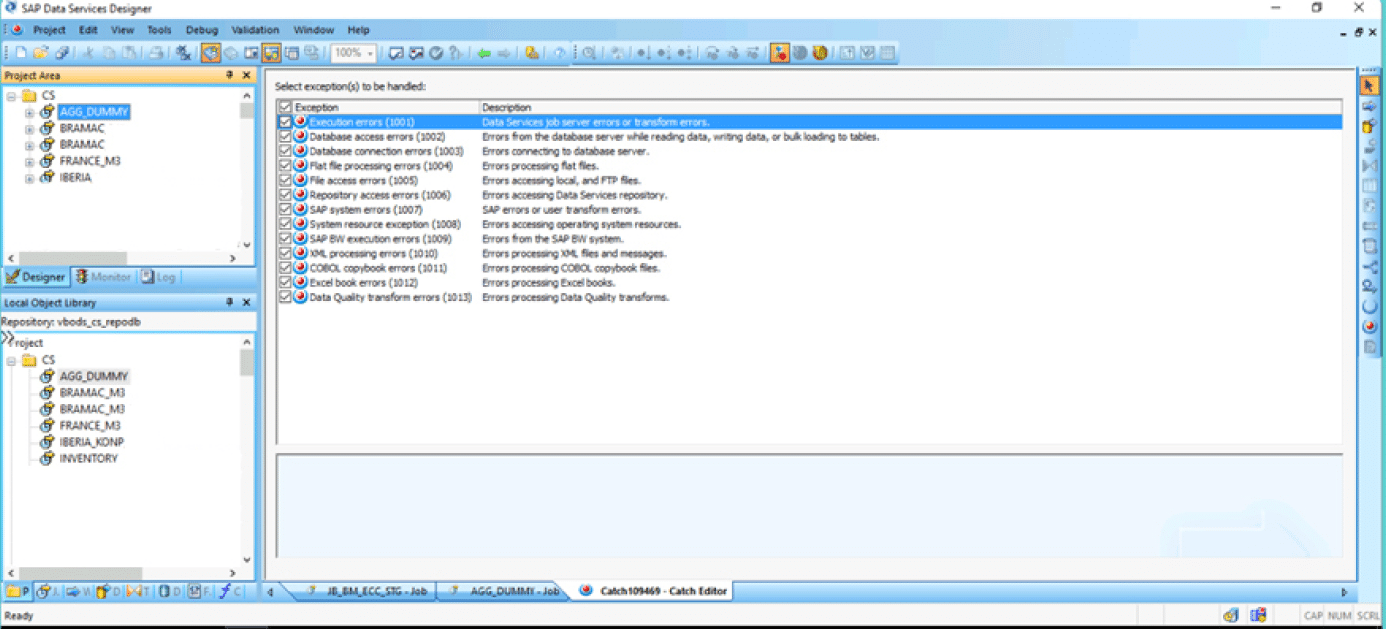
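The batching principle behind bulk loading can be sketched with Python's `sqlite3` module: rows are sent in batches with one `executemany` call and one commit per batch, instead of one statement per row. The `sales` table and batch size are hypothetical; the native bulk loader options that BODS exposes are database-specific and are not reproduced here.

```python
import sqlite3
from itertools import islice

def batched_insert(conn, rows, batch_size=10_000):
    """Insert rows in batches: one executemany call and one commit per batch. Returns rows inserted."""
    it = iter(rows)
    total = 0
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            break
        conn.executemany("INSERT INTO sales (id, amount) VALUES (?, ?)", batch)
        conn.commit()
        total += len(batch)
    return total

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")
inserted = batched_insert(conn, ((i, i * 1.5) for i in range(25)), batch_size=10)
```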

Implement robust error handling and logging mechanisms to capture and address errors promptly. Effective error management minimizes downtime and ensures timely resolution of issues, enhancing overall job reliability.

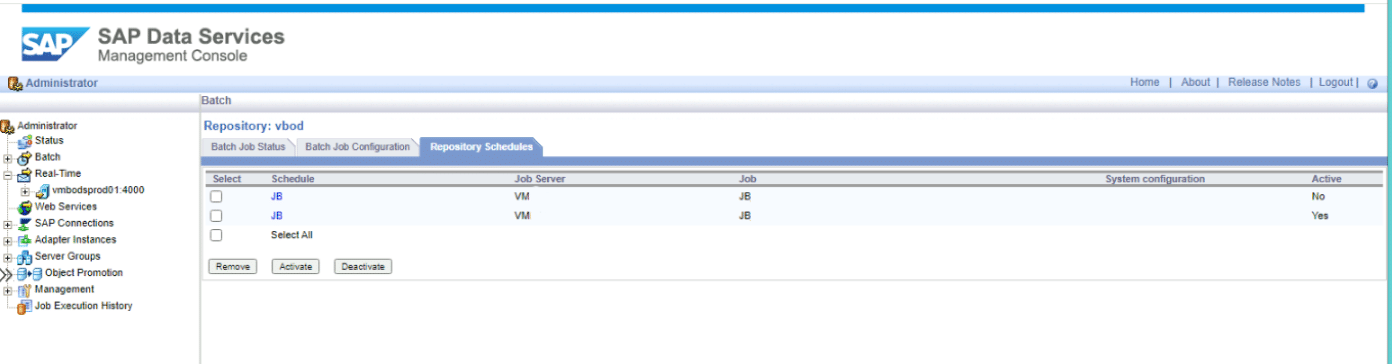
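A minimal Python sketch of two common error-handling patterns: retrying a batch load on transient failures with logging, and diverting bad rows to a reject list instead of failing the whole run. The loader functions and the choice of which exceptions count as transient are assumptions; BODS provides its own mechanisms, such as Try and Catch objects and job error logs, which are not reproduced here.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("etl")

TRANSIENT_ERRORS = (ConnectionError, TimeoutError)  # assumption: which failures are worth retrying

def load_with_retry(load_fn, batch, retries=3, delay_s=1.0):
    """Call load_fn(batch), retrying transient errors with linear back-off; re-raise after the last attempt."""
    for attempt in range(1, retries + 1):
        try:
            return load_fn(batch)
        except TRANSIENT_ERRORS as exc:
            logger.warning("load attempt %d/%d failed: %s", attempt, retries, exc)
            if attempt == retries:
                logger.error("giving up after %d attempts", retries)
                raise
            time.sleep(delay_s * attempt)

def load_rows(rows, load_row):
    """Load rows one by one, sending rows that raise ValueError to a reject list."""
    loaded, rejected = 0, []
    for row in rows:
        try:
            load_row(row)
            loaded += 1
        except ValueError as exc:
            logger.error("rejected row %r: %s", row, exc)
            rejected.append((row, str(exc)))
    return loaded, rejected

# Demo: a hypothetical loader that fails twice with a transient error, then succeeds
attempts = []
def flaky_load(batch):
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("database unavailable")
    return len(batch)

written = load_with_retry(flaky_load, [1, 2, 3], delay_s=0)

def check_amount(amount):
    """Hypothetical row check: negative amounts are rejected."""
    if amount < 0:
        raise ValueError("negative amount")

loaded, rejected = load_rows([5, -1, 7], check_amount)
```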

Schedule BODS jobs to run during off-peak hours or periods of low system activity to maximize resource availability and minimize interference with critical processes. Automating the schedule around load requirements, for example by running dependent jobs in order and leaving enough time for the largest loads, helps jobs finish before their deadlines.
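Job schedules themselves are normally defined in a scheduler, for BODS typically the Data Services Management Console or an enterprise scheduler. As an illustration of the off-peak logic only, this Python sketch returns when a job should start given a hypothetical 01:00 to 05:00 window that does not cross midnight.

```python
from datetime import datetime, time, timedelta

def next_off_peak_start(now, window_start=time(1, 0), window_end=time(5, 0)):
    """Return `now` if it is inside the off-peak window, otherwise the next window start.

    Assumes the window does not cross midnight (window_start < window_end).
    """
    if not window_start < window_end:
        raise ValueError("window must not cross midnight")
    start = datetime.combine(now.date(), window_start)
    end = datetime.combine(now.date(), window_end)
    if start <= now < end:
        return now  # already off-peak: start immediately
    if now < start:
        return start  # window opens later today
    return start + timedelta(days=1)  # today's window has passed: start tomorrow
```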

Monitor and manage the system resources (memory, CPU, disk I/O) used by BODS jobs to prevent performance bottlenecks. Allocate resources according to each job's requirements so that data loading tasks run smoothly and finish on time.
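One way to act on resource monitoring is to size a job's parallelism from the current host load. The following Python sketch, with hypothetical parameter names and thresholds, picks a worker count from the CPU count and the one-minute load average (`os.getloadavg`, available on Unix-like systems), always allowing at least one worker. It illustrates the idea only and is not a BODS setting.

```python
import os

def choose_workers(max_workers=8, reserve_cpus=1, load_avg=None, cpu_count=None):
    """Return a worker count that leaves headroom on a busy host (at least 1, at most max_workers)."""
    cpus = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    if load_avg is None:
        # One-minute load average; treat the host as idle where getloadavg is unavailable
        load_avg = os.getloadavg()[0] if hasattr(os, "getloadavg") else 0.0
    idle_cpus = cpus - reserve_cpus - load_avg
    return max(1, min(max_workers, int(idle_cpus)))
```

The result could be passed as `max_workers` to a thread or process pool like the ones used for parallel loading.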

By implementing these advanced techniques and best practices in ETL data loading, organizations can optimize performance, reduce processing time, and consistently meet project deadlines. Efficient data loading not only enhances operational efficiency but also supports data-driven decision-making and business agility.

About the Author

Aditya Raparla

Senior Software Developer
